diff --git a/README.md b/README.md

index 8c1d5b920..a418d09f0 100644

--- a/README.md

+++ b/README.md

@@ -147,6 +147,7 @@ PaddleScience 是一个基于深度学习框架 PaddlePaddle 开发的科学计

| 交通预测 | [TGCN 交通流量预测](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/tgcn) | 数据驱动 | GCN & CNN | 监督学习 | [PEMSD4 & PEMSD8](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tgcn/tgcn_data.zip) | - |

| 遥感图像分割 | [UNetFormer 遥感图像分割](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/unetformer) | 数据驱动 | UNetFormer | 监督学习 | [Vaihingen](https://paperswithcode.com/dataset/isprs-vaihingen) | [Paper](https://github.com/WangLibo1995/GeoSeg) |

| 生成模型| [图像生成中的梯度惩罚应用](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/wgan_gp)|数据驱动|WGAN GP|监督学习|[Data1](https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz)

[Data2](http://www.iro.umontreal.ca/~lisa/deep/data/mnist/mnist.pkl.gz)| [Paper](https://github.com/igul222/improved_wgan_training) |

+| 遥感图像分割 | [UTAE 遥感时序语义/全景分割](https://paddlescience-docs.readthedocs.io/zh-cn/latest/zh/examples/UTAE/) | 数据驱动 | UTAE | 监督学习 | [PASTIS](https://zenodo.org/records/5012942) | [Paper](https://arxiv.org/abs/2107.07933) |

diff --git a/docs/index.md b/docs/index.md

index 0fb15089b..d69e8ec80 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -164,6 +164,7 @@

| 遥感图像分割 | [UNetFormer分割图像](./zh/examples/unetformer.md) | 数据驱动 | UNetformer | 监督学习 | [Vaihingen](https://paperswithcode.com/dataset/isprs-vaihingen) | [Paper](https://github.com/WangLibo1995/GeoSeg) |

| 交通预测 | [TGCN 交通流量预测](./zh/examples/tgcn.md) | 数据驱动 | GCN & CNN | 监督学习 | [PEMSD4 & PEMSD8](https://paddle-org.bj.bcebos.com/paddlescience/datasets/tgcn/tgcn_data.zip) | - |

| 生成模型| [图像生成中的梯度惩罚应用](./zh/examples/wgan_gp.md)|数据驱动|WGAN GP|监督学习|[Data1](https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz)

[Data2](http://www.iro.umontreal.ca/~lisa/deep/data/mnist/mnist.pkl.gz)| [Paper](https://github.com/igul222/improved_wgan_training) |

+ | 遥感图像分割 | [UTAE 遥感时序语义/全景分割](./zh/examples/UTAE.md) | 数据驱动 | UTAE | 监督学习 | [PASTIS](https://zenodo.org/records/5012942) | [Paper](https://arxiv.org/abs/2107.07933) |

=== "化学科学"

diff --git a/docs/zh/examples/UTAE.md b/docs/zh/examples/UTAE.md

new file mode 100644

index 000000000..2c40d0684

--- /dev/null

+++ b/docs/zh/examples/UTAE.md

@@ -0,0 +1,142 @@

+# 农作物种植情况实时监测

+

+!!! note

+

+ 运行模型前请在 [PASTIS官网](https://zenodo.org/records/5012942) 中下载PASTIS数据集,并将其放在 `./UTAE/data/` 文件夹下。

+

+=== "模型训练命令"

+

+ ``` sh

+ # 语义分割任务

+ python train_semantic.py \

+ --dataset_folder "./data/PASTIS" \

+ --epochs 100 \

+ --batch_size 2 \

+ --num_workers 0 \

+ --display_step 10

+ # 全景分割任务

+ python train_panoptic.py \

+ --dataset_folder "./data/PASTIS" \

+ --epochs 100 \

+ --batch_size 2 \

+ --num_workers 0 \

+ --warmup 5 \

+ --l_shape 1 \

+ --display_step 10

+ ```

+

+=== "模型评估命令"

+

+ ``` sh

+ # 语义分割任务

+ wget -nc https://paddle-org.bj.bcebos.com/paddlescience/models/utae/semantic.pdparams -P ./pretrained/

+ python test_semantic.py \

+ --weight_file ./pretrained/semantic.pdparams \

+ --dataset_folder "./data/PASTIS" \

+ --device gpu

+ --num_workers 0

+ # 全景分割任务

+ wget -nc https://paddle-org.bj.bcebos.com/paddlescience/models/utae/panoptic.pdparams -P ./pretrained/

+ python test_panoptic.py \

+ --weight_folder ./pretrained/panoptic.pdparams \

+ --dataset_folder ./data/PASTIS \

+ --batch_size 2 \

+ --num_workers 0 \

+ --device gpu

+ ```

+

+| 预训练模型 | 指标 |

+|:--| :--|

+| [语义分割任务](https://paddle-org.bj.bcebos.com/paddlescience/models/utae/semantic.pdparams) | OA (Over all Accuracy): 86.7%

mIoU (mean Intersection over Union): 72.6% |

+| [全景分割任务](https://paddle-org.bj.bcebos.com/paddlescience/models/utae/panoptic.pdparams) | SQ (Segmentation Quality): 83.8

RQ (Recognition Quality): 58.9

PQ (Panoptic Quality): 49.7 |

+

+## 背景简介

+对农作物种植分布和生长状态进行高效、精准的监测,是现代智慧农业和粮食安全领域的核心需求。传统的人工勘察方法耗时费力,而利用单时相卫星影像进行分析的方法,难以应对云层遮挡问题,也无法捕捉作物在整个生长周期中的动态变化规律。

+

+卫星图像时间序列(Satellite Image Time Series, SITS)技术为解决这一难题提供了新的途径。通过持续采集同一区域在不同时间的多光谱影像,SITS数据蕴含了作物从播种、出苗、生长、成熟到收割的全过程光谱和纹理信息。然而,SITS数据具有时序长、维度高、时空关联性强等特点,如何从中高效地提取特征并进行精确的像素级分类(语义分割)是一项重大的技术挑战。

+

+本项目基于模型U-TAE(U-Net Temporal Attention Encoder),利用PaddlePaddle深度学习框架进行实现,旨在构建一个端到端的解决方案,对PASTIS数据集中的卫星影像时间序列进行语义分割,从而实现对多种农作物种植情况的自动化、高精度识别与监测。该技术可广泛应用于农业资源调查、产量预估、灾害评估等领域,具有重要的实用价值。

+

+## 模型原理

+

+本章节仅对U-TAE的模型原理进行简单介绍,详细的理论推导请参考论文:[Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks](https://arxiv.org/abs/2107.07933)

+

+### 1. 整体结构

+

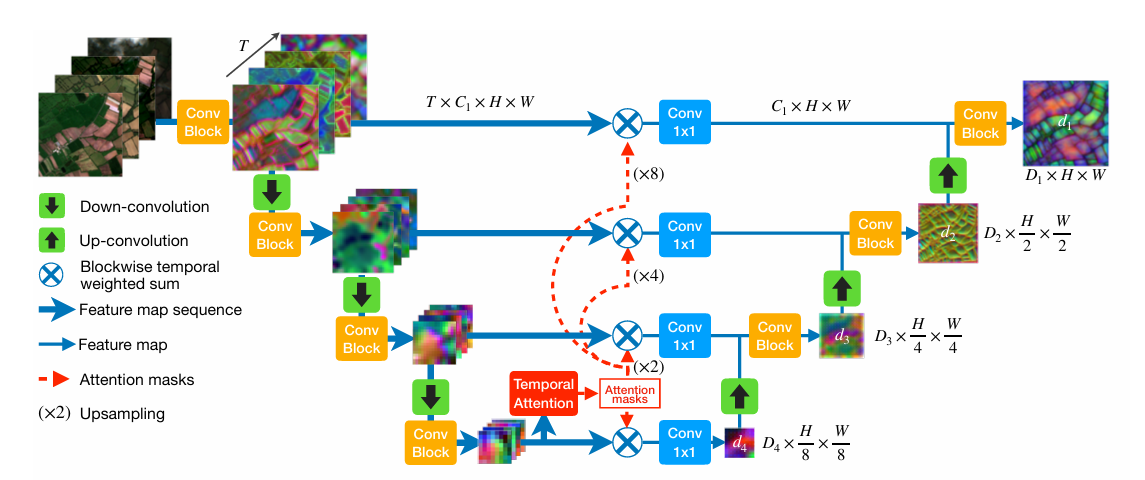

+UTAE(U-Net Temporal Attention Encoder)采用编码器-解码器架构,专为卫星图像时间序列语义分割设计:

+

+- **编码器**:使用轻量化的ResNet-18,提取单时相的空间特征。

+- **解码器**:集成U-TAE模块,利用时间注意力机制聚合多时相的全局上下文信息。

+- **输出**:生成与输入相同分辨率的像素级类别概率图。

+

+

+

+### 2. 时间注意力机制(Temporal Attention)

+

+对于长度为 $T$ 的帧序列,UTAE在解码阶段为每一帧计算帧间相似度权重,实现自适应的时序信息聚合:

+

+- **Query**:当前帧的特征 $\mathbf{Q}$

+- **Key / Value**:全部帧的特征 $\mathbf{K}, \mathbf{V}$

+

+计算步骤如下:

+

+$$

+\text{权重} = \text{Softmax}(\mathbf{Q} \cdot \mathbf{K}^\top)

+$$

+

+然后,利用这些权重对全部帧的特征进行加权求和得到聚合特征:

+

+$$

+\mathbf{F}_{\text{agg}} = \sum_{t=1}^{T} \alpha_t \mathbf{V}_t, \quad \text{其中} \quad \alpha_t = \text{Softmax}(\mathbf{Q} \cdot \mathbf{K}_t^\top)

+$$

+

+该机制能自动抑制云层、阴影等低质量帧,提升作物边界的清晰度。

+

+### 3. 全局-局部注意力块(GLTB)

+

+每个解码器层包含两个并行分支:

+

+- **全局分支**:采用多头自注意力(Multi-Head Self-Attention)机制,建模田块级的长程依赖关系。

+

+- **局部分支**:使用 $3 \times 3$ 深度可分离卷积,注重边缘和细节信息的保留。

+

+两个分支的输出通过逐元素相加融合,既保持全局上下文,又保留局部纹理细节。

+

+### 4. 实时推理优化

+

+为实现高效实时推理,模型采用以下优化策略:

+

+- **轻量级骨干**:ResNet-18,参数量小于12M。

+- **帧间共享权重**:在同一序列中,Key和Value只计算一次,避免重复计算。

+- **滑动窗口推理**:将大图划分为多个块进行逐块推理,确保显存占用恒定。

+

+## 数据集介绍

+

+PASTIS数据集,该数据集由2433个 $10\times128\times128$ 形状的多光谱图像序列组成。每个序列包含2018年9月至2019年11月之间的38至61个观察点,总计超过20亿像素。获取间隔时间不均匀,平均为5天。这种缺乏规律性的现象是由于卫星数据提供商对大量云层覆盖的采集进行了自动过滤。该数据集覆盖4000多平方公里,图像来自法国四个不同地区,气候和作物分布多样。

+数据集可通过 [PASTIS官网](https://zenodo.org/records/5012942) 下载。

+

+## 模型实现

+

+### 模型构建

+

+本案例基于 UTAE(U-TAE) 实现,用 PaddleScience 封装如下:

+

+``` py linenums="12" title="examples/UTAE/src/backbones/utae.py"

+--8<--

+examples/UTAE/src/backbones/utae.py:12:177

+--8<--

+```

+

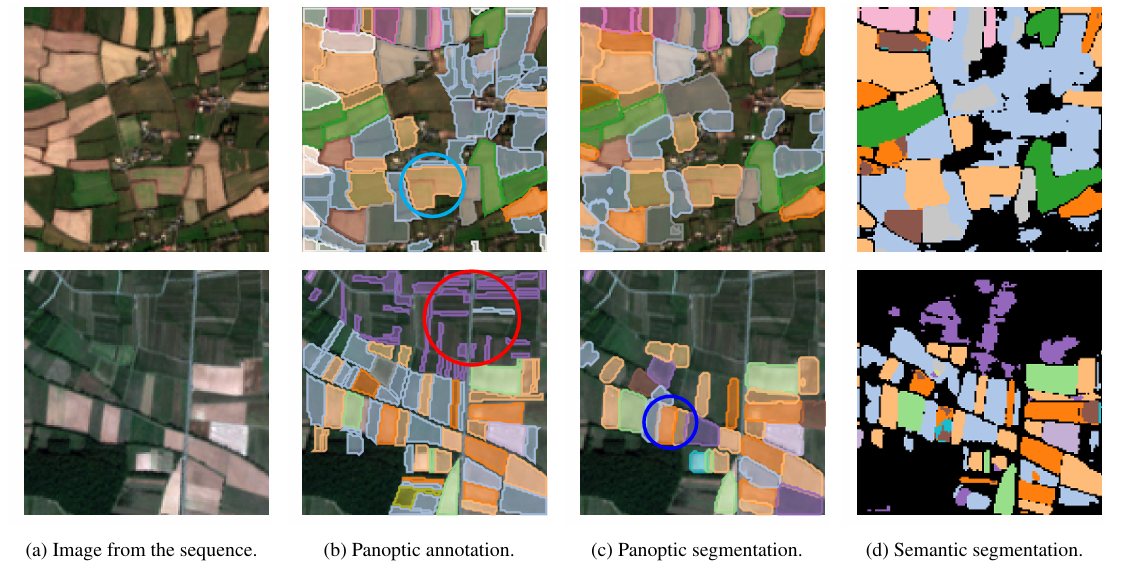

+## 可视化结果

+在 PASTIS 数据集上,本案例复现了全景分割预测与语义分割预测的可视化结果如图所示:

+

+

+

+(a)原始图像 (b)标注(真实标签)(c) 全景分割预测 (d) 语义分割预测

+

+上图展示了 PASTIS 数据集上的农田地块分割结果。在图中用不同颜色表示不同的地块。绿色圈出的位置代表大块地被错误识别为单一地块;红色圈出的位置代表很多细长地块未被正确检测;蓝色圈出的位置展示了 全景分割优于语义分割的情况。模型在区域边界检测方面具有较好表现,尤其在复杂边界的恢复上有所优势。但在面对细长、破碎或复杂地块时,仍然存在挑战,容易导致置信度下降或检测失败。

+## 参考文献

+

+- U-TAE 原论文:[Panoptic Segmentation of Satellite Image Time Series with Convolutional Temporal Attention Networks](https://arxiv.org/abs/2107.07933)

+- 源代码实现:[https://github.com/VSainteuf/utae-paps](https://github.com/VSainteuf/utae-paps)

+- 数据集与基准:[https://github.com/VSainteuf/pastis-benchmark](https://github.com/VSainteuf/pastis-benchmark)

diff --git a/examples/UTAE/src/backbones/convlstm.py b/examples/UTAE/src/backbones/convlstm.py

new file mode 100644

index 000000000..aacf3ba6e

--- /dev/null

+++ b/examples/UTAE/src/backbones/convlstm.py

@@ -0,0 +1,262 @@

+"""

+ConvLSTM Implementation (Paddle Version)

+Converted to PaddlePaddle

+"""

+import paddle

+import paddle.nn as nn

+

+

+class ConvLSTMCell(nn.Layer):

+ """

+ Initialize ConvLSTM cell.

+

+ Parameters

+ ----------

+ input_size: (int, int)

+ Height and width of input tensor as (height, width).

+ input_dim: int

+ Number of channels of input tensor.

+ hidden_dim: int

+ Number of channels of hidden state.

+ kernel_size: (int, int)

+ Size of the convolutional kernel.

+ bias: bool

+ Whether or not to add the bias.

+ """

+

+ def __init__(self, input_size, input_dim, hidden_dim, kernel_size, bias):

+

+ super(ConvLSTMCell, self).__init__()

+ self.height, self.width = input_size

+ self.input_dim = input_dim

+ self.hidden_dim = hidden_dim

+

+ self.kernel_size = kernel_size

+ self.padding = kernel_size[0] // 2, kernel_size[1] // 2

+ self.bias = bias

+

+ self.conv = nn.Conv2D(

+ in_channels=self.input_dim + self.hidden_dim,

+ out_channels=4 * self.hidden_dim,

+ kernel_size=self.kernel_size,

+ padding=self.padding,

+ bias_attr=self.bias,

+ )

+

+ def forward(self, input_tensor, cur_state):

+ h_cur, c_cur = cur_state

+

+ combined = paddle.concat(

+ [input_tensor, h_cur], axis=1

+ ) # concatenate along channel axis

+

+ combined_conv = self.conv(combined)

+ cc_i, cc_f, cc_o, cc_g = paddle.split(combined_conv, self.hidden_dim, axis=1)

+ i = paddle.nn.functional.sigmoid(cc_i)

+ f = paddle.nn.functional.sigmoid(cc_f)

+ o = paddle.nn.functional.sigmoid(cc_o)

+ g = paddle.nn.functional.tanh(cc_g)

+

+ c_next = f * c_cur + i * g

+ h_next = o * paddle.nn.functional.tanh(c_next)

+

+ return h_next, c_next

+

+ def init_hidden(self, batch_size):

+ return (

+ paddle.zeros([batch_size, self.hidden_dim, self.height, self.width]),

+ paddle.zeros([batch_size, self.hidden_dim, self.height, self.width]),

+ )

+

+

+class ConvLSTM(nn.Layer):

+ def __init__(

+ self,

+ input_size,

+ input_dim,

+ hidden_dim,

+ kernel_size,

+ num_layers=1,

+ batch_first=True,

+ bias=True,

+ return_all_layers=False,

+ ):

+ super(ConvLSTM, self).__init__()

+

+ self._check_kernel_size_consistency(kernel_size)

+

+ # Make sure that both `kernel_size` and `hidden_dim` are lists having len == num_layers

+ kernel_size = self._extend_for_multilayer(kernel_size, num_layers)

+ hidden_dim = self._extend_for_multilayer(hidden_dim, num_layers)

+ if not len(kernel_size) == len(hidden_dim) == num_layers:

+ raise ValueError("Inconsistent list length.")

+

+ self.height, self.width = input_size

+

+ self.input_dim = input_dim

+ self.hidden_dim = hidden_dim

+ self.kernel_size = kernel_size

+ self.num_layers = num_layers

+ self.batch_first = batch_first

+ self.bias = bias

+ self.return_all_layers = return_all_layers

+

+ cell_list = []

+ for i in range(0, self.num_layers):

+ cur_input_dim = self.input_dim if i == 0 else self.hidden_dim[i - 1]

+

+ cell_list.append(

+ ConvLSTMCell(

+ input_size=(self.height, self.width),

+ input_dim=cur_input_dim,

+ hidden_dim=self.hidden_dim[i],

+ kernel_size=self.kernel_size[i],

+ bias=self.bias,

+ )

+ )

+

+ self.cell_list = nn.LayerList(cell_list)

+

+ def forward(self, input_tensor, hidden_state=None, pad_mask=None):

+ """

+ Parameters

+ ----------

+ input_tensor: todo

+ 5-D Tensor either of shape (t, b, c, h, w) or (b, t, c, h, w)

+ hidden_state: todo

+ None. todo implement stateful

+ pad_mask (b, t)

+ Returns

+ -------

+ last_state_list, layer_output

+ """

+ if not self.batch_first:

+ # (t, b, c, h, w) -> (b, t, c, h, w)

+ input_tensor = input_tensor.transpose([1, 0, 2, 3, 4])

+

+ # Implement stateful ConvLSTM

+ if hidden_state is not None:

+ raise NotImplementedError()

+ else:

+ hidden_state = self._init_hidden(batch_size=input_tensor.shape[0])

+

+ layer_output_list = []

+ last_state_list = []

+

+ seq_len = input_tensor.shape[1]

+ cur_layer_input = input_tensor

+

+ for layer_idx in range(self.num_layers):

+ h, c = hidden_state[layer_idx]

+ output_inner = []

+ for t in range(seq_len):

+ if pad_mask is not None:

+ # Only process non-padded timesteps

+ mask = ~pad_mask[:, t] # B

+ if mask.any():

+ h_t, c_t = self.cell_list[layer_idx](

+ cur_layer_input[mask, t, :, :, :],

+ cur_state=(h[mask], c[mask]),

+ )

+ h[mask] = h_t

+ c[mask] = c_t

+ else:

+ h, c = self.cell_list[layer_idx](

+ input_tensor=cur_layer_input[:, t, :, :, :], cur_state=[h, c]

+ )

+ output_inner.append(h)

+

+ layer_output = paddle.stack(output_inner, axis=1)

+ cur_layer_input = layer_output

+

+ layer_output_list.append(layer_output)

+ last_state_list.append([h, c])

+

+ if not self.return_all_layers:

+ layer_output_list = layer_output_list[-1:]

+ last_state_list = last_state_list[-1:]

+

+ return layer_output_list, last_state_list

+

+ def _init_hidden(self, batch_size):

+ init_states = []

+ for i in range(self.num_layers):

+ init_states.append(self.cell_list[i].init_hidden(batch_size))

+ return init_states

+

+ @staticmethod

+ def _check_kernel_size_consistency(kernel_size):

+ if not (

+ isinstance(kernel_size, tuple)

+ or (

+ isinstance(kernel_size, list)

+ and all([isinstance(elem, tuple) for elem in kernel_size])

+ )

+ ):

+ raise ValueError("`kernel_size` must be tuple or list of tuples")

+

+ @staticmethod

+ def _extend_for_multilayer(param, num_layers):

+ if not isinstance(param, list):

+ param = [param] * num_layers

+ return param

+

+

+class BConvLSTM(nn.Layer):

+ """Bidirectional ConvLSTM"""

+

+ def __init__(

+ self,

+ input_size,

+ input_dim,

+ hidden_dim,

+ kernel_size,

+ num_layers=1,

+ batch_first=True,

+ bias=True,

+ ):

+ super(BConvLSTM, self).__init__()

+

+ self.forward_net = ConvLSTM(

+ input_size,

+ input_dim,

+ hidden_dim,

+ kernel_size,

+ num_layers,

+ batch_first,

+ bias,

+ return_all_layers=False,

+ )

+ self.backward_net = ConvLSTM(

+ input_size,

+ input_dim,

+ hidden_dim,

+ kernel_size,

+ num_layers,

+ batch_first,

+ bias,

+ return_all_layers=False,

+ )

+

+ def forward(self, input_tensor, pad_mask=None):

+ # Forward pass

+ forward_output, _ = self.forward_net(input_tensor, pad_mask=pad_mask)

+

+ # Backward pass - reverse the sequence

+ reversed_input = paddle.flip(input_tensor, [1]) # Reverse time dimension

+ if pad_mask is not None:

+ reversed_pad_mask = paddle.flip(pad_mask, [1])

+ else:

+ reversed_pad_mask = None

+

+ backward_output, _ = self.backward_net(

+ reversed_input, pad_mask=reversed_pad_mask

+ )

+ backward_output = [

+ paddle.flip(output, [1]) for output in backward_output

+ ] # Reverse back

+

+ # Concatenate forward and backward outputs

+ combined_output = paddle.concat([forward_output[0], backward_output[0]], axis=2)

+

+ return combined_output

diff --git a/examples/UTAE/src/backbones/ltae.py b/examples/UTAE/src/backbones/ltae.py

new file mode 100644

index 000000000..6c0417818

--- /dev/null

+++ b/examples/UTAE/src/backbones/ltae.py

@@ -0,0 +1,222 @@

+import copy

+

+import numpy as np

+import paddle

+import paddle.nn as nn

+from src.backbones.positional_encoding import PositionalEncoder

+

+

+class LTAE2d(nn.Layer):

+ """

+ Lightweight Temporal Attention Encoder (L-TAE) for image time series.

+ Attention-based sequence encoding that maps a sequence of images to a single feature map.

+ A shared L-TAE is applied to all pixel positions of the image sequence.

+ Args:

+ in_channels (int): Number of channels of the input embeddings.

+ n_head (int): Number of attention heads.

+ d_k (int): Dimension of the key and query vectors.

+ mlp (List[int]): Widths of the layers of the MLP that processes the concatenated outputs of the attention heads.

+ dropout (float): dropout

+ d_model (int, optional): If specified, the input tensors will first processed by a fully connected layer

+ to project them into a feature space of dimension d_model.

+ T (int): Period to use for the positional encoding.

+ return_att (bool): If true, the module returns the attention masks along with the embeddings (default False)

+ positional_encoding (bool): If False, no positional encoding is used (default True).

+ """

+

+ def __init__(

+ self,

+ in_channels=128,

+ n_head=16,

+ d_k=4,

+ mlp=[256, 128],

+ dropout=0.2,

+ d_model=256,

+ T=1000,

+ return_att=False,

+ positional_encoding=True,

+ ):

+

+ super(LTAE2d, self).__init__()

+ self.in_channels = in_channels

+ self.mlp = copy.deepcopy(mlp)

+ self.return_att = return_att

+ self.n_head = n_head

+

+ if d_model is not None:

+ self.d_model = d_model

+ self.inconv = nn.Conv1D(in_channels, d_model, 1)

+ else:

+ self.d_model = in_channels

+ self.inconv = None

+ assert self.mlp[0] == self.d_model

+

+ if positional_encoding:

+ self.positional_encoder = PositionalEncoder(

+ self.d_model // n_head, T=T, repeat=n_head

+ )

+ else:

+ self.positional_encoder = None

+

+ self.attention_heads = MultiHeadAttention(

+ n_head=n_head, d_k=d_k, d_in=self.d_model

+ )

+ self.in_norm = nn.GroupNorm(

+ num_groups=n_head,

+ num_channels=self.in_channels,

+ )

+ self.out_norm = nn.GroupNorm(

+ num_groups=n_head,

+ num_channels=mlp[-1],

+ )

+

+ layers = []

+ for i in range(len(self.mlp) - 1):

+ layers.extend(

+ [

+ nn.Linear(self.mlp[i], self.mlp[i + 1]),

+ nn.BatchNorm1D(self.mlp[i + 1]),

+ nn.ReLU(),

+ ]

+ )

+

+ self.mlp = nn.Sequential(*layers)

+ self.dropout = nn.Dropout(dropout)

+

+ def forward(self, x, batch_positions=None, pad_mask=None, return_comp=False):

+ sz_b, seq_len, d, h, w = x.shape

+ if pad_mask is not None:

+ pad_mask = (

+ pad_mask.unsqueeze(-1).tile([1, 1, h]).unsqueeze(-1).tile([1, 1, 1, w])

+ ) # BxTxHxW

+ pad_mask = pad_mask.transpose([0, 2, 3, 1]).reshape([sz_b * h * w, seq_len])

+

+ out = x.transpose([0, 3, 4, 1, 2]).reshape([sz_b * h * w, seq_len, d])

+ out = self.in_norm(out.transpose([0, 2, 1])).transpose([0, 2, 1])

+

+ if self.inconv is not None:

+ out = self.inconv(out.transpose([0, 2, 1])).transpose([0, 2, 1])

+

+ if self.positional_encoder is not None:

+ bp = (

+ batch_positions.unsqueeze(-1)

+ .tile([1, 1, h])

+ .unsqueeze(-1)

+ .tile([1, 1, 1, w])

+ ) # BxTxHxW

+ bp = bp.transpose([0, 2, 3, 1]).reshape([sz_b * h * w, seq_len])

+ out = out + self.positional_encoder(bp)

+

+ out, attn = self.attention_heads(out, pad_mask=pad_mask)

+

+ out = out.transpose([1, 0, 2]).reshape([sz_b * h * w, -1]) # Concatenate heads

+ out = self.dropout(self.mlp(out))

+ out = self.out_norm(out) if self.out_norm is not None else out

+ out = out.reshape([sz_b, h, w, -1]).transpose([0, 3, 1, 2])

+

+ attn = attn.reshape([self.n_head, sz_b, h, w, seq_len]).transpose(

+ [0, 1, 4, 2, 3]

+ ) # head x b x t x h x w

+

+ if self.return_att:

+ return out, attn

+ else:

+ return out

+

+

+class MultiHeadAttention(nn.Layer):

+ """Multi-Head Attention module

+ Modified from github.com/jadore801120/attention-is-all-you-need-pytorch

+ """

+

+ def __init__(self, n_head, d_k, d_in):

+ super().__init__()

+ self.n_head = n_head

+ self.d_k = d_k

+ self.d_in = d_in

+

+ self.Q = self.create_parameter(

+ shape=[n_head, d_k],

+ dtype="float32",

+ default_initializer=nn.initializer.Normal(mean=0.0, std=np.sqrt(2.0 / d_k)),

+ )

+

+ self.fc1_k = nn.Linear(d_in, n_head * d_k)

+ # Initialize weights

+ nn.initializer.Normal(mean=0.0, std=np.sqrt(2.0 / d_k))(self.fc1_k.weight)

+

+ self.attention = ScaledDotProductAttention(

+ temperature=float(np.power(d_k, 0.5))

+ )

+

+ def forward(self, v, pad_mask=None, return_comp=False):

+ d_k, d_in, n_head = self.d_k, self.d_in, self.n_head

+ sz_b, seq_len, _ = v.shape

+

+ q = paddle.stack([self.Q for _ in range(sz_b)], axis=1).reshape(

+ [-1, d_k]

+ ) # (n*b) x d_k

+

+ k = self.fc1_k(v).reshape([sz_b, seq_len, n_head, d_k])

+ k = k.transpose([2, 0, 1, 3]).reshape([-1, seq_len, d_k]) # (n*b) x lk x dk

+

+ if pad_mask is not None:

+ pad_mask = pad_mask.tile(

+ [n_head, 1]

+ ) # replicate pad_mask for each head (nxb) x lk

+

+ # Split v into n_head chunks

+ chunk_size = v.shape[-1] // n_head

+ v_chunks = []

+ for i in range(n_head):

+ start_idx = i * chunk_size

+ end_idx = (i + 1) * chunk_size

+ v_chunks.append(v[:, :, start_idx:end_idx])

+ v = paddle.stack(v_chunks).reshape([n_head * sz_b, seq_len, -1])

+

+ if return_comp:

+ output, attn, comp = self.attention(

+ q, k, v, pad_mask=pad_mask, return_comp=return_comp

+ )

+ else:

+ output, attn = self.attention(

+ q, k, v, pad_mask=pad_mask, return_comp=return_comp

+ )

+ attn = attn.reshape([n_head, sz_b, 1, seq_len])

+ attn = attn.squeeze(axis=2)

+

+ output = output.reshape([n_head, sz_b, 1, d_in // n_head])

+ output = output.squeeze(axis=2)

+

+ if return_comp:

+ return output, attn, comp

+ else:

+ return output, attn

+

+

+class ScaledDotProductAttention(nn.Layer):

+ """Scaled Dot-Product Attention

+ Modified from github.com/jadore801120/attention-is-all-you-need-pytorch

+ """

+

+ def __init__(self, temperature, attn_dropout=0.1):

+ super().__init__()

+ self.temperature = temperature

+ self.dropout = nn.Dropout(attn_dropout)

+ self.softmax = nn.Softmax(axis=2)

+

+ def forward(self, q, k, v, pad_mask=None, return_comp=False):

+ attn = paddle.matmul(q.unsqueeze(1), k.transpose([0, 2, 1]))

+ attn = attn / self.temperature

+ if pad_mask is not None:

+ attn = paddle.where(pad_mask.unsqueeze(1), paddle.to_tensor(-1e3), attn)

+ if return_comp:

+ comp = attn

+ attn = self.softmax(attn)

+ attn = self.dropout(attn)

+ output = paddle.matmul(attn, v)

+

+ if return_comp:

+ return output, attn, comp

+ else:

+ return output, attn

diff --git a/examples/UTAE/src/backbones/positional_encoding.py b/examples/UTAE/src/backbones/positional_encoding.py

new file mode 100644

index 000000000..2b944b56d

--- /dev/null

+++ b/examples/UTAE/src/backbones/positional_encoding.py

@@ -0,0 +1,39 @@

+import paddle

+import paddle.nn as nn

+

+

+class PositionalEncoder(nn.Layer):

+ def __init__(self, d, T=1000, repeat=None, offset=0):

+ super(PositionalEncoder, self).__init__()

+ self.d = d

+ self.T = T

+ self.repeat = repeat

+ self.denom = paddle.pow(

+ paddle.to_tensor(T, dtype="float32"),

+ 2 * (paddle.arange(offset, offset + d, dtype="float32") // 2) / d,

+ )

+ self.updated_location = False

+

+ def forward(self, batch_positions):

+ if not self.updated_location:

+ # Move to same device as input

+ if hasattr(batch_positions, "place"):

+ self.denom = (

+ self.denom.cuda()

+ if "gpu" in str(batch_positions.place)

+ else self.denom.cpu()

+ )

+ self.updated_location = True

+

+ sinusoid_table = (

+ batch_positions[:, :, None].astype("float32") / self.denom[None, None, :]

+ ) # B x T x C

+ sinusoid_table[:, :, 0::2] = paddle.sin(sinusoid_table[:, :, 0::2]) # dim 2i

+ sinusoid_table[:, :, 1::2] = paddle.cos(sinusoid_table[:, :, 1::2]) # dim 2i+1

+

+ if self.repeat is not None:

+ sinusoid_table = paddle.concat(

+ [sinusoid_table for _ in range(self.repeat)], axis=-1

+ )

+

+ return sinusoid_table

diff --git a/examples/UTAE/src/backbones/utae.py b/examples/UTAE/src/backbones/utae.py

new file mode 100644

index 000000000..32ce5ce99

--- /dev/null

+++ b/examples/UTAE/src/backbones/utae.py

@@ -0,0 +1,615 @@

+"""

+U-TAE Implementation (Paddle Version)

+Converted to PaddlePaddle

+"""

+import paddle

+import paddle.nn as nn

+from src.backbones.convlstm import BConvLSTM

+from src.backbones.convlstm import ConvLSTM

+from src.backbones.ltae import LTAE2d

+

+

+class UTAE(nn.Layer):

+ """

+ U-TAE architecture for spatio-temporal encoding of satellite image time series.

+ Args:

+ input_dim (int): Number of channels in the input images.

+ encoder_widths (List[int]): List giving the number of channels of the successive encoder_widths of the convolutional encoder.

+ This argument also defines the number of encoder_widths (i.e. the number of downsampling steps +1)

+ in the architecture.

+ The number of channels are given from top to bottom, i.e. from the highest to the lowest resolution.

+ decoder_widths (List[int], optional): Same as encoder_widths but for the decoder. The order in which the number of

+ channels should be given is also from top to bottom. If this argument is not specified the decoder

+ will have the same configuration as the encoder.

+ out_conv (List[int]): Number of channels of the successive convolutions for the

+ str_conv_k (int): Kernel size of the strided up and down convolutions.

+ str_conv_s (int): Stride of the strided up and down convolutions.

+ str_conv_p (int): Padding of the strided up and down convolutions.

+ agg_mode (str): Aggregation mode for the skip connections. Can either be:

+ - att_group (default) : Attention weighted temporal average, using the same

+ channel grouping strategy as in the LTAE. The attention masks are bilinearly

+ resampled to the resolution of the skipped feature maps.

+ - att_mean : Attention weighted temporal average,

+ using the average attention scores across heads for each date.

+ - mean : Temporal average excluding padded dates.

+ encoder_norm (str): Type of normalisation layer to use in the encoding branch. Can either be:

+ - group : GroupNorm (default)

+ - batch : BatchNorm

+ - instance : InstanceNorm

+ n_head (int): Number of heads in LTAE.

+ d_model (int): Parameter of LTAE

+ d_k (int): Key-Query space dimension

+ encoder (bool): If true, the feature maps instead of the class scores are returned (default False)

+ return_maps (bool): If true, the feature maps instead of the class scores are returned (default False)

+ pad_value (float): Value used by the dataloader for temporal padding.

+ padding_mode (str): Spatial padding strategy for convolutional layers (passed to nn.Conv2D).

+ """

+

+ def __init__(

+ self,

+ input_dim,

+ encoder_widths=[64, 64, 64, 128],

+ decoder_widths=[32, 32, 64, 128],

+ out_conv=[32, 20],

+ str_conv_k=4,

+ str_conv_s=2,

+ str_conv_p=1,

+ agg_mode="att_group",

+ encoder_norm="group",

+ n_head=16,

+ d_model=256,

+ d_k=4,

+ encoder=False,

+ return_maps=False,

+ pad_value=0,

+ padding_mode="reflect",

+ ):

+

+ super(UTAE, self).__init__()

+ self.n_stages = len(encoder_widths)

+ self.return_maps = return_maps

+ self.encoder_widths = encoder_widths

+ self.decoder_widths = decoder_widths

+ self.enc_dim = (

+ decoder_widths[0] if decoder_widths is not None else encoder_widths[0]

+ )

+ self.stack_dim = (

+ sum(decoder_widths) if decoder_widths is not None else sum(encoder_widths)

+ )

+ self.pad_value = pad_value

+ self.encoder = encoder

+ if encoder:

+ self.return_maps = True

+

+ if decoder_widths is not None:

+ assert len(encoder_widths) == len(decoder_widths)

+ assert encoder_widths[-1] == decoder_widths[-1]

+ else:

+ decoder_widths = encoder_widths

+

+ self.in_conv = ConvBlock(

+ nkernels=[input_dim] + [encoder_widths[0], encoder_widths[0]],

+ pad_value=pad_value,

+ norm=encoder_norm,

+ padding_mode=padding_mode,

+ )

+ self.down_blocks = nn.LayerList(

+ [

+ DownConvBlock(

+ d_in=encoder_widths[i],

+ d_out=encoder_widths[i + 1],

+ k=str_conv_k,

+ s=str_conv_s,

+ p=str_conv_p,

+ pad_value=pad_value,

+ norm=encoder_norm,

+ padding_mode=padding_mode,

+ )

+ for i in range(self.n_stages - 1)

+ ]

+ )

+ self.up_blocks = nn.LayerList(

+ [

+ UpConvBlock(

+ d_in=decoder_widths[i],

+ d_out=decoder_widths[i - 1],

+ d_skip=encoder_widths[i - 1],

+ k=str_conv_k,

+ s=str_conv_s,

+ p=str_conv_p,

+ norm="batch",

+ padding_mode=padding_mode,

+ )

+ for i in range(self.n_stages - 1, 0, -1)

+ ]

+ )

+ self.temporal_encoder = LTAE2d(

+ in_channels=encoder_widths[-1],

+ d_model=d_model,

+ n_head=n_head,

+ mlp=[d_model, encoder_widths[-1]],

+ return_att=True,

+ d_k=d_k,

+ )

+ self.temporal_aggregator = Temporal_Aggregator(mode=agg_mode)

+ self.out_conv = ConvBlock(

+ nkernels=[decoder_widths[0]] + out_conv, padding_mode=padding_mode

+ )

+

+ def forward(self, input, batch_positions=None, return_att=False):

+ # Create pad mask by comparing with pad_value

+ # Use safe tensor comparison to avoid type issues

+ pad_value_tensor = paddle.to_tensor(self.pad_value, dtype=input.dtype)

+ comparison = paddle.equal(input, pad_value_tensor)

+

+ # Sequentially reduce dimensions using all()

+ mask_step1 = paddle.all(comparison, axis=-1) # Reduce last dim

+ mask_step2 = paddle.all(mask_step1, axis=-1) # Reduce second-to-last dim

+ pad_mask = paddle.all(mask_step2, axis=-1) # Reduce third-to-last dim (BxT)

+ out = self.in_conv.smart_forward(input)

+ feature_maps = [out]

+ # SPATIAL ENCODER

+ for i in range(self.n_stages - 1):

+ out = self.down_blocks[i].smart_forward(feature_maps[-1])

+ feature_maps.append(out)

+ # TEMPORAL ENCODER

+ out, att = self.temporal_encoder(

+ feature_maps[-1], batch_positions=batch_positions, pad_mask=pad_mask

+ )

+ # SPATIAL DECODER

+ if self.return_maps:

+ maps = [out]

+ for i in range(self.n_stages - 1):

+ skip = self.temporal_aggregator(

+ feature_maps[-(i + 2)], pad_mask=pad_mask, attn_mask=att

+ )

+ out = self.up_blocks[i](out, skip)

+ if self.return_maps:

+ maps.append(out)

+

+ if self.encoder:

+ return out, maps

+ else:

+ out = self.out_conv(out)

+ if return_att:

+ return out, att

+ if self.return_maps:

+ return out, maps

+ else:

+ return out

+

+

+class TemporallySharedBlock(nn.Layer):

+ """

+ Helper module for convolutional encoding blocks that are shared across a sequence.

+ This module adds the self.smart_forward() method the the block.

+ smart_forward will combine the batch and temporal dimension of an input tensor

+ if it is 5-D and apply the shared convolutions to all the (batch x temp) positions.

+ """

+

+ def __init__(self, pad_value=None):

+ super(TemporallySharedBlock, self).__init__()

+ self.out_shape = None

+ self.pad_value = pad_value

+

+ def smart_forward(self, input):

+ if len(input.shape) == 4:

+ return self.forward(input)

+ else:

+ b, t, c, h, w = input.shape

+

+ if self.pad_value is not None:

+ dummy = paddle.zeros(input.shape).astype("float32")

+ self.out_shape = self.forward(dummy.reshape([b * t, c, h, w])).shape

+

+ out = input.reshape([b * t, c, h, w])

+ if self.pad_value is not None:

+ pad_value_tensor = paddle.to_tensor(self.pad_value, dtype=out.dtype)

+ comparison = paddle.equal(out, pad_value_tensor)

+ mask_step1 = paddle.all(comparison, axis=-1) # Reduce last dim

+ mask_step2 = paddle.all(

+ mask_step1, axis=-1

+ ) # Reduce second-to-last dim

+ pad_mask = paddle.all(mask_step2, axis=-1) # Reduce third-to-last dim

+ if pad_mask.any():

+ temp = paddle.ones(self.out_shape) * self.pad_value

+ temp[~pad_mask] = self.forward(out[~pad_mask])

+ out = temp

+ else:

+ out = self.forward(out)

+ else:

+ out = self.forward(out)

+ _, c, h, w = out.shape

+ out = out.reshape([b, t, c, h, w])

+ return out

+

+

+class ConvLayer(nn.Layer):

+ def __init__(

+ self,

+ nkernels,

+ norm="batch",

+ k=3,

+ s=1,

+ p=1,

+ n_groups=4,

+ last_relu=True,

+ padding_mode="reflect",

+ ):

+ super(ConvLayer, self).__init__()

+ layers = []

+ if norm == "batch":

+ nl = nn.BatchNorm2D

+ elif norm == "instance":

+ nl = nn.InstanceNorm2D

+ elif norm == "group":

+ nl = lambda num_feats: nn.GroupNorm(

+ num_channels=num_feats,

+ num_groups=n_groups,

+ )

+ else:

+ nl = None

+ for i in range(len(nkernels) - 1):

+ layers.append(

+ nn.Conv2D(

+ in_channels=nkernels[i],

+ out_channels=nkernels[i + 1],

+ kernel_size=k,

+ padding=p,

+ stride=s,

+ padding_mode=padding_mode,

+ )

+ )

+ if nl is not None:

+ layers.append(nl(nkernels[i + 1]))

+

+ if last_relu:

+ layers.append(nn.ReLU())

+ elif i < len(nkernels) - 2:

+ layers.append(nn.ReLU())

+ self.conv = nn.Sequential(*layers)

+

+ def forward(self, input):

+ return self.conv(input)

+

+

+class ConvBlock(TemporallySharedBlock):

+ def __init__(

+ self,

+ nkernels,

+ pad_value=None,

+ norm="batch",

+ last_relu=True,

+ padding_mode="reflect",

+ ):

+ super(ConvBlock, self).__init__(pad_value=pad_value)

+ self.conv = ConvLayer(

+ nkernels=nkernels,

+ norm=norm,

+ last_relu=last_relu,

+ padding_mode=padding_mode,

+ )

+

+ def forward(self, input):

+ return self.conv(input)

+

+

+class DownConvBlock(TemporallySharedBlock):

+ def __init__(

+ self,

+ d_in,

+ d_out,

+ k,

+ s,

+ p,

+ pad_value=None,

+ norm="batch",

+ padding_mode="reflect",

+ ):

+ super(DownConvBlock, self).__init__(pad_value=pad_value)

+ self.down = ConvLayer(

+ nkernels=[d_in, d_in],

+ norm=norm,

+ k=k,

+ s=s,

+ p=p,

+ padding_mode=padding_mode,

+ )

+ self.conv1 = ConvLayer(

+ nkernels=[d_in, d_out],

+ norm=norm,

+ padding_mode=padding_mode,

+ )

+ self.conv2 = ConvLayer(

+ nkernels=[d_out, d_out],

+ norm=norm,

+ padding_mode=padding_mode,

+ )

+

+ def forward(self, input):

+ out = self.down(input)

+ out = self.conv1(out)

+ out = out + self.conv2(out)

+ return out

+

+

+class UpConvBlock(nn.Layer):

+ def __init__(

+ self, d_in, d_out, k, s, p, norm="batch", d_skip=None, padding_mode="reflect"

+ ):

+ super(UpConvBlock, self).__init__()

+ d = d_out if d_skip is None else d_skip

+ self.skip_conv = nn.Sequential(

+ nn.Conv2D(in_channels=d, out_channels=d, kernel_size=1),

+ nn.BatchNorm2D(d),

+ nn.ReLU(),

+ )

+ self.up = nn.Sequential(

+ nn.Conv2DTranspose(

+ in_channels=d_in, out_channels=d_out, kernel_size=k, stride=s, padding=p

+ ),

+ nn.BatchNorm2D(d_out),

+ nn.ReLU(),

+ )

+ self.conv1 = ConvLayer(

+ nkernels=[d_out + d, d_out], norm=norm, padding_mode=padding_mode

+ )

+ self.conv2 = ConvLayer(

+ nkernels=[d_out, d_out], norm=norm, padding_mode=padding_mode

+ )

+

+ def forward(self, input, skip):

+ out = self.up(input)

+ out = paddle.concat([out, self.skip_conv(skip)], axis=1)

+ out = self.conv1(out)

+ out = out + self.conv2(out)

+ return out

+

+

+class Temporal_Aggregator(nn.Layer):

+ def __init__(self, mode="mean"):

+ super(Temporal_Aggregator, self).__init__()

+ self.mode = mode

+

+ def forward(self, x, pad_mask=None, attn_mask=None):

+ if pad_mask is not None and pad_mask.any():

+ if self.mode == "att_group":

+ n_heads, b, t, h, w = attn_mask.shape

+ attn = attn_mask.reshape([n_heads * b, t, h, w])

+

+ if x.shape[-2] > w:

+ attn = nn.functional.interpolate(

+ attn, size=x.shape[-2:], mode="bilinear", align_corners=False

+ )

+ else:

+ attn = nn.functional.avg_pool2d(attn, kernel_size=w // x.shape[-2])

+

+ attn = attn.reshape([n_heads, b, t, *x.shape[-2:]])

+ attn = attn * (~pad_mask).astype("float32")[None, :, :, None, None]

+

+ # Split x into n_heads chunks along axis 2

+ chunk_size = x.shape[2] // n_heads

+ x_chunks = []

+ for i in range(n_heads):

+ start_idx = i * chunk_size

+ end_idx = (i + 1) * chunk_size

+ x_chunks.append(x[:, :, start_idx:end_idx, :, :])

+ out = paddle.stack(x_chunks) # hxBxTxC/hxHxW

+ out = attn[:, :, :, None, :, :] * out

+ out = out.sum(axis=2) # sum on temporal dim -> hxBxC/hxHxW

+ out = paddle.concat([group for group in out], axis=1) # -> BxCxHxW

+ return out

+ elif self.mode == "att_mean":

+ attn = attn_mask.mean(axis=0) # average over heads -> BxTxHxW

+ attn = nn.functional.interpolate(

+ attn, size=x.shape[-2:], mode="bilinear", align_corners=False

+ )

+ attn = attn * (~pad_mask).astype("float32")[:, :, None, None]

+ out = (x * attn[:, :, None, :, :]).sum(axis=1)

+ return out

+ elif self.mode == "mean":

+ out = x * (~pad_mask).astype("float32")[:, :, None, None, None]

+ out = out.sum(axis=1) / (~pad_mask).sum(axis=1)[:, None, None, None]

+ return out

+ else:

+ if self.mode == "att_group":

+ n_heads, b, t, h, w = attn_mask.shape

+ attn = attn_mask.reshape([n_heads * b, t, h, w])

+ if x.shape[-2] > w:

+ attn = nn.functional.interpolate(

+ attn, size=x.shape[-2:], mode="bilinear", align_corners=False

+ )

+ else:

+ attn = nn.functional.avg_pool2d(attn, kernel_size=w // x.shape[-2])

+ attn = attn.reshape([n_heads, b, t, *x.shape[-2:]])

+ # Split x into n_heads chunks along axis 2

+ chunk_size = x.shape[2] // n_heads

+ x_chunks = []

+ for i in range(n_heads):

+ start_idx = i * chunk_size

+ end_idx = (i + 1) * chunk_size

+ x_chunks.append(x[:, :, start_idx:end_idx, :, :])

+ out = paddle.stack(x_chunks) # hxBxTxC/hxHxW

+ out = attn[:, :, :, None, :, :] * out

+ out = out.sum(axis=2) # sum on temporal dim -> hxBxC/hxHxW

+ out = paddle.concat([group for group in out], axis=1) # -> BxCxHxW

+ return out

+ elif self.mode == "att_mean":

+ attn = attn_mask.mean(axis=0) # average over heads -> BxTxHxW

+ attn = nn.functional.interpolate(

+ attn, size=x.shape[-2:], mode="bilinear", align_corners=False

+ )

+ out = (x * attn[:, :, None, :, :]).sum(axis=1)

+ return out

+ elif self.mode == "mean":

+ return x.mean(axis=1)

+

+

+class RecUNet(nn.Layer):

+ """Recurrent U-Net architecture. Similar to the U-TAE architecture but

+ the L-TAE is replaced by a recurrent network

+ and temporal averages are computed for the skip connections."""

+

+ def __init__(

+ self,

+ input_dim,

+ encoder_widths=[64, 64, 64, 128],

+ decoder_widths=[32, 32, 64, 128],

+ out_conv=[32, 20],

+ str_conv_k=4,

+ str_conv_s=2,

+ str_conv_p=1,

+ temporal="lstm",

+ input_size=128,

+ encoder_norm="group",

+ hidden_dim=128,

+ encoder=False,

+ padding_mode="reflect",

+ pad_value=0,

+ ):

+ super(RecUNet, self).__init__()

+ self.n_stages = len(encoder_widths)

+ self.temporal = temporal

+ self.encoder_widths = encoder_widths

+ self.decoder_widths = decoder_widths

+ self.enc_dim = (

+ decoder_widths[0] if decoder_widths is not None else encoder_widths[0]

+ )

+ self.stack_dim = (

+ sum(decoder_widths) if decoder_widths is not None else sum(encoder_widths)

+ )

+ self.pad_value = pad_value

+

+ self.encoder = encoder

+ if encoder:

+ self.return_maps = True

+ else:

+ self.return_maps = False

+

+ if decoder_widths is not None:

+ assert len(encoder_widths) == len(decoder_widths)

+ assert encoder_widths[-1] == decoder_widths[-1]

+ else:

+ decoder_widths = encoder_widths

+

+ self.in_conv = ConvBlock(

+ nkernels=[input_dim] + [encoder_widths[0], encoder_widths[0]],

+ pad_value=pad_value,

+ norm=encoder_norm,

+ )

+

+ self.down_blocks = nn.LayerList(

+ [

+ DownConvBlock(

+ d_in=encoder_widths[i],

+ d_out=encoder_widths[i + 1],

+ k=str_conv_k,

+ s=str_conv_s,

+ p=str_conv_p,

+ pad_value=pad_value,

+ norm=encoder_norm,

+ padding_mode=padding_mode,

+ )

+ for i in range(self.n_stages - 1)

+ ]

+ )

+ self.up_blocks = nn.LayerList(

+ [

+ UpConvBlock(

+ d_in=decoder_widths[i],

+ d_out=decoder_widths[i - 1],

+ d_skip=encoder_widths[i - 1],

+ k=str_conv_k,

+ s=str_conv_s,

+ p=str_conv_p,

+ norm=encoder_norm,

+ padding_mode=padding_mode,

+ )

+ for i in range(self.n_stages - 1, 0, -1)

+ ]

+ )

+ self.temporal_aggregator = Temporal_Aggregator(mode="mean")

+

+ if temporal == "mean":

+ self.temporal_encoder = Temporal_Aggregator(mode="mean")

+ elif temporal == "lstm":

+ size = int(input_size / str_conv_s ** (self.n_stages - 1))

+ self.temporal_encoder = ConvLSTM(

+ input_dim=encoder_widths[-1],

+ input_size=(size, size),

+ hidden_dim=hidden_dim,

+ kernel_size=(3, 3),

+ )

+ self.out_convlstm = nn.Conv2D(

+ in_channels=hidden_dim,

+ out_channels=encoder_widths[-1],

+ kernel_size=3,

+ padding=1,

+ )

+ elif temporal == "blstm":

+ size = int(input_size / str_conv_s ** (self.n_stages - 1))

+ self.temporal_encoder = BConvLSTM(

+ input_dim=encoder_widths[-1],

+ input_size=(size, size),

+ hidden_dim=hidden_dim,

+ kernel_size=(3, 3),

+ )

+ self.out_convlstm = nn.Conv2D(

+ in_channels=2 * hidden_dim,

+ out_channels=encoder_widths[-1],

+ kernel_size=3,

+ padding=1,

+ )

+ elif temporal == "mono":

+ self.temporal_encoder = None

+ self.out_conv = ConvBlock(

+ nkernels=[decoder_widths[0]] + out_conv, padding_mode=padding_mode

+ )

+

+ def forward(self, input, batch_positions=None):

+ pad_mask = (

+ (input == self.pad_value).all(axis=-1).all(axis=-1).all(axis=-1)

+ ) # BxT pad mask

+

+ out = self.in_conv.smart_forward(input)

+

+ feature_maps = [out]

+ # ENCODER

+ for i in range(self.n_stages - 1):

+ out = self.down_blocks[i].smart_forward(feature_maps[-1])

+ feature_maps.append(out)

+

+ # Temporal encoder

+ if self.temporal == "mean":

+ out = self.temporal_encoder(feature_maps[-1], pad_mask=pad_mask)

+ elif self.temporal == "lstm":

+ _, out = self.temporal_encoder(feature_maps[-1], pad_mask=pad_mask)

+ out = out[0][1] # take last cell state as embedding

+ out = self.out_convlstm(out)

+ elif self.temporal == "blstm":

+ out = self.temporal_encoder(feature_maps[-1], pad_mask=pad_mask)

+ out = self.out_convlstm(out)

+ elif self.temporal == "mono":

+ out = feature_maps[-1]

+

+ if self.return_maps:

+ maps = [out]

+ for i in range(self.n_stages - 1):

+ if self.temporal != "mono":

+ skip = self.temporal_aggregator(

+ feature_maps[-(i + 2)], pad_mask=pad_mask

+ )

+ else:

+ skip = feature_maps[-(i + 2)]

+ out = self.up_blocks[i](out, skip)

+ if self.return_maps:

+ maps.append(out)

+

+ if self.encoder:

+ return out, maps

+ else:

+ out = self.out_conv(out)

+ if self.return_maps:

+ return out, maps

+ else:

+ return out

diff --git a/examples/UTAE/src/dataset.py b/examples/UTAE/src/dataset.py

new file mode 100644

index 000000000..c264d36a1

--- /dev/null

+++ b/examples/UTAE/src/dataset.py

@@ -0,0 +1,291 @@

+import json

+import os

+from datetime import datetime

+

+import geopandas as gpd

+import numpy as np

+import paddle

+import paddle.io as pio

+import pandas as pd

+

+

+class PASTIS_Dataset(pio.Dataset):

+ def __init__(

+ self,

+ folder,

+ norm=True,

+ target="semantic",

+ cache=False,

+ mem16=False,

+ folds=None,

+ reference_date="2018-09-01",

+ class_mapping=None,

+ mono_date=None,

+ sats=["S2"],

+ ):

+

+ super(PASTIS_Dataset, self).__init__()

+ self.folder = folder

+ self.norm = norm

+ self.reference_date = datetime(*map(int, reference_date.split("-")))

+ self.cache = cache

+ self.mem16 = mem16

+ self.mono_date = None

+ if mono_date is not None:

+ self.mono_date = (

+ datetime(*map(int, mono_date.split("-")))

+ if "-" in mono_date

+ else int(mono_date)

+ )

+ self.memory = {}

+ self.memory_dates = {}

+ self.class_mapping = (

+ np.vectorize(lambda x: class_mapping[x])

+ if class_mapping is not None

+ else class_mapping

+ )

+ self.target = target

+ self.sats = sats

+

+ # Get metadata

+ print("Reading patch metadata . . .")

+ self.meta_patch = gpd.read_file(os.path.join(folder, "metadata.geojson"))

+ self.meta_patch.index = self.meta_patch["ID_PATCH"].astype(int)

+ self.meta_patch.sort_index(inplace=True)

+

+ self.date_tables = {s: None for s in sats}

+ self.date_range = np.array(range(-200, 600))

+ for s in sats:

+ dates = self.meta_patch["dates-{}".format(s)]

+ date_table = pd.DataFrame(

+ index=self.meta_patch.index, columns=self.date_range, dtype=int

+ )

+ for pid, date_seq in dates.items():

+ if type(date_seq) == str:

+ date_seq = json.loads(date_seq)

+ d = pd.DataFrame().from_dict(date_seq, orient="index")

+ d = d[0].apply(

+ lambda x: (

+ datetime(int(str(x)[:4]), int(str(x)[4:6]), int(str(x)[6:]))

+ - self.reference_date

+ ).days

+ )

+ date_table.loc[pid, d.values] = 1

+ date_table = date_table.fillna(0)

+ self.date_tables[s] = {

+ index: np.array(list(d.values()))

+ for index, d in date_table.to_dict(orient="index").items()

+ }

+

+ print("Done.")

+

+ # Select Fold samples

+ if folds is not None:

+ self.meta_patch = pd.concat(

+ [self.meta_patch[self.meta_patch["Fold"] == f] for f in folds]

+ )

+

+ self.len = self.meta_patch.shape[0]

+ self.id_patches = self.meta_patch.index

+

+ # Get normalisation values

+ if norm:

+ self.norm = {}

+ for s in self.sats:

+ with open(

+ os.path.join(folder, "NORM_{}_patch.json".format(s)), "r"

+ ) as file:

+ normvals = json.loads(file.read())

+ selected_folds = folds if folds is not None else range(1, 6)

+ means = [normvals["Fold_{}".format(f)]["mean"] for f in selected_folds]

+ stds = [normvals["Fold_{}".format(f)]["std"] for f in selected_folds]

+ self.norm[s] = np.stack(means).mean(axis=0), np.stack(stds).mean(axis=0)

+ self.norm[s] = (

+ paddle.to_tensor(self.norm[s][0], dtype="float32"),

+ paddle.to_tensor(self.norm[s][1], dtype="float32"),

+ )

+ else:

+ self.norm = None

+ print("Dataset ready.")

+

+ def __len__(self):

+ return self.len

+

+ def get_dates(self, id_patch, sat):

+ return self.date_range[np.where(self.date_tables[sat][id_patch] == 1)[0]]

+

+ def __getitem__(self, item):

+ id_patch = self.id_patches[item]

+

+ # Retrieve and prepare satellite data

+ if not self.cache or item not in self.memory.keys():

+ data = {

+ satellite: np.load(

+ os.path.join(

+ self.folder,

+ "DATA_{}".format(satellite),

+ "{}_{}.npy".format(satellite, id_patch),

+ )

+ ).astype(np.float32)

+ for satellite in self.sats

+ } # T x C x H x W arrays

+ data = {s: paddle.to_tensor(a) for s, a in data.items()}

+

+ if self.norm is not None:

+ data = {

+ s: (d - self.norm[s][0][None, :, None, None])

+ / self.norm[s][1][None, :, None, None]

+ for s, d in data.items()

+ }

+

+ if self.target == "semantic":

+ target = np.load(

+ os.path.join(

+ self.folder, "ANNOTATIONS", "TARGET_{}.npy".format(id_patch)

+ )

+ )

+ target = paddle.to_tensor(target[0].astype(int))

+

+ if self.class_mapping is not None:

+ target = self.class_mapping(target)

+

+ elif self.target == "instance":

+ heatmap = np.load(

+ os.path.join(

+ self.folder,

+ "INSTANCE_ANNOTATIONS",

+ "HEATMAP_{}.npy".format(id_patch),

+ )

+ )

+

+ instance_ids = np.load(

+ os.path.join(

+ self.folder,

+ "INSTANCE_ANNOTATIONS",

+ "INSTANCES_{}.npy".format(id_patch),

+ )

+ )

+ pixel_to_object_mapping = np.load(

+ os.path.join(

+ self.folder,

+ "INSTANCE_ANNOTATIONS",

+ "ZONES_{}.npy".format(id_patch),

+ )

+ )

+

+ pixel_semantic_annotation = np.load(

+ os.path.join(

+ self.folder, "ANNOTATIONS", "TARGET_{}.npy".format(id_patch)

+ )

+ )

+

+ if self.class_mapping is not None:

+ pixel_semantic_annotation = self.class_mapping(

+ pixel_semantic_annotation[0]

+ )

+ else:

+ pixel_semantic_annotation = pixel_semantic_annotation[0]

+

+ size = np.zeros((*instance_ids.shape, 2))

+ object_semantic_annotation = np.zeros(instance_ids.shape)

+ for instance_id in np.unique(instance_ids):

+ if instance_id != 0:

+ h = (instance_ids == instance_id).any(axis=-1).sum()

+ w = (instance_ids == instance_id).any(axis=-2).sum()

+ size[pixel_to_object_mapping == instance_id] = (h, w)

+ object_semantic_annotation[

+ pixel_to_object_mapping == instance_id

+ ] = pixel_semantic_annotation[instance_ids == instance_id][0]

+

+ target = paddle.to_tensor(

+ np.concatenate(

+ [

+ heatmap[:, :, None], # 0

+ instance_ids[:, :, None], # 1

+ pixel_to_object_mapping[:, :, None], # 2

+ size, # 3-4

+ object_semantic_annotation[:, :, None], # 5

+ pixel_semantic_annotation[:, :, None], # 6

+ ],

+ axis=-1,

+ ),

+ dtype="float32",

+ )

+

+ if self.cache:

+ if self.mem16:

+ self.memory[item] = [

+ {k: v.astype("float16") for k, v in data.items()},

+ target,

+ ]

+ else:

+ self.memory[item] = [data, target]

+

+ else:

+ data, target = self.memory[item]

+ if self.mem16:

+ data = {k: v.astype("float32") for k, v in data.items()}

+

+ # Retrieve date sequences

+ if not self.cache or id_patch not in self.memory_dates.keys():

+ dates = {

+ s: paddle.to_tensor(self.get_dates(id_patch, s)) for s in self.sats

+ }

+ if self.cache:

+ self.memory_dates[id_patch] = dates

+ else:

+ dates = self.memory_dates[id_patch]

+

+ if self.mono_date is not None:

+ if isinstance(self.mono_date, int):

+ data = {s: data[s][self.mono_date].unsqueeze(0) for s in self.sats}

+ dates = {s: dates[s][self.mono_date] for s in self.sats}

+ else:

+ mono_delta = (self.mono_date - self.reference_date).days

+ mono_date = {

+ s: int((dates[s] - mono_delta).abs().argmin()) for s in self.sats

+ }

+ data = {s: data[s][mono_date[s]].unsqueeze(0) for s in self.sats}

+ dates = {s: dates[s][mono_date[s]] for s in self.sats}

+

+ if self.mem16:

+ data = {k: v.astype("float32") for k, v in data.items()}

+

+ if len(self.sats) == 1:

+ data = data[self.sats[0]]

+ dates = dates[self.sats[0]]

+

+ return (data, dates), target

+

+

+def prepare_dates(date_dict, reference_date):

+ d = pd.DataFrame().from_dict(date_dict, orient="index")

+ d = d[0].apply(

+ lambda x: (

+ datetime(int(str(x)[:4]), int(str(x)[4:6]), int(str(x)[6:]))

+ - reference_date

+ ).days

+ )

+ return d.values

+

+

+def compute_norm_vals(folder, sat):

+ norm_vals = {}

+ for fold in range(1, 6):

+ dt = PASTIS_Dataset(folder=folder, norm=False, folds=[fold], sats=[sat])

+ means = []

+ stds = []

+ for i, b in enumerate(dt):

+ print("{}/{}".format(i, len(dt)), end="\r")

+ data = b[0][0][sat] # T x C x H x W

+ data = data.transpose([1, 0, 2, 3]) # C x T x H x W

+ means.append(data.reshape([data.shape[0], -1]).mean(axis=-1).numpy())

+ stds.append(data.reshape([data.shape[0], -1]).std(axis=-1).numpy())

+

+ mean = np.stack(means).mean(axis=0).astype(float)

+ std = np.stack(stds).mean(axis=0).astype(float)

+

+ norm_vals["Fold_{}".format(fold)] = dict(mean=list(mean), std=list(std))

+

+ with open(os.path.join(folder, "NORM_{}_patch.json".format(sat)), "w") as file:

+ file.write(json.dumps(norm_vals, indent=4))

diff --git a/examples/UTAE/src/learning/metrics.py b/examples/UTAE/src/learning/metrics.py

new file mode 100644

index 000000000..d292374b5

--- /dev/null

+++ b/examples/UTAE/src/learning/metrics.py

@@ -0,0 +1,53 @@

+"""

+Metrics utilities (Paddle Version)

+"""

+import numpy as np

+

+"""

+Compute per-class and overall metrics from confusion matrix

+"""

+

+

+def confusion_matrix_analysis(cm):

+

+ n_classes = cm.shape[0]

+

+ # Overall accuracy

+ acc = np.diag(cm).sum() / (cm.sum() + 1e-15)

+

+ # Per-class metrics

+ per_class_metrics = {}

+ ious = []

+

+ for i in range(n_classes):

+ # True positives, false positives, false negatives

+ tp = cm[i, i]

+ fp = cm[:, i].sum() - tp

+ fn = cm[i, :].sum() - tp

+

+ # Precision, recall, F1

+ precision = tp / (tp + fp + 1e-15)

+ recall = tp / (tp + fn + 1e-15)

+ f1 = 2 * precision * recall / (precision + recall + 1e-15)

+

+ # IoU

+ union = tp + fp + fn

+ iou = tp / (union + 1e-15)

+ ious.append(iou)

+

+ per_class_metrics[f"class_{i}"] = {

+ "precision": precision,

+ "recall": recall,

+ "f1": f1,

+ "iou": iou,

+ }

+

+ # Mean metrics

+ mean_iou = np.mean(ious)

+

+ return {

+ "overall_accuracy": acc,

+ "mean_iou": mean_iou,

+ "per_class": per_class_metrics,

+ "confusion_matrix": cm.tolist(),

+ }

diff --git a/examples/UTAE/src/learning/miou.py b/examples/UTAE/src/learning/miou.py

new file mode 100644

index 000000000..622e61f63

--- /dev/null

+++ b/examples/UTAE/src/learning/miou.py

@@ -0,0 +1,78 @@

+"""

+IoU metric computation (Paddle Version)

+"""

+import numpy as np

+import paddle

+

+

+class IoU:

+ def __init__(self, num_classes, ignore_index=-1, cm_device="cpu"):

+ self.num_classes = num_classes

+ self.ignore_index = ignore_index

+ self.cm_device = cm_device

+ self.confusion_matrix = np.zeros((num_classes, num_classes))

+

+ """

+ Add predictions and targets to confusion matrix

+ """

+

+ def add(self, pred, target):

+ # Convert to numpy if tensors

+ if isinstance(pred, paddle.Tensor):

+ pred = pred.cpu().numpy()

+ if isinstance(target, paddle.Tensor):

+ target = target.cpu().numpy()

+

+ # Flatten arrays

+ pred = pred.flatten()

+ target = target.flatten()

+

+ # Remove ignore index

+ if self.ignore_index is not None:

+ mask = target != self.ignore_index

+ pred = pred[mask]

+ target = target[mask]

+

+ # Compute confusion matrix

+ for t, p in zip(target.flatten(), pred.flatten()):

+ if 0 <= t < self.num_classes and 0 <= p < self.num_classes:

+ self.confusion_matrix[t, p] += 1

+

+ """

+ Get mean IoU and accuracy from confusion matrix

+ """

+

+ def get_miou_acc(self):

+

+ # Overall accuracy

+ acc = np.diag(self.confusion_matrix).sum() / (

+ self.confusion_matrix.sum() + 1e-15

+ )

+

+ # Per-class IoU

+ ious = []

+ for i in range(self.num_classes):

+ intersection = self.confusion_matrix[i, i]

+ union = (

+ self.confusion_matrix[i, :].sum()

+ + self.confusion_matrix[:, i].sum()

+ - intersection

+ )

+

+ if union > 0:

+ ious.append(intersection / union)

+ else:

+ ious.append(0.0)

+

+ # Mean IoU

+ miou = np.mean(ious)

+

+ return miou, acc

+

+ """

+ Reset confusion matrix

+ """

+

+ def reset(self):

+

+ self.confusion_matrix.fill(0)

diff --git a/examples/UTAE/src/learning/weight_init.py b/examples/UTAE/src/learning/weight_init.py

new file mode 100644

index 000000000..b79c04f4f

--- /dev/null

+++ b/examples/UTAE/src/learning/weight_init.py

@@ -0,0 +1,24 @@

+"""

+Weight initialization utilities (Paddle Version)

+"""

+import paddle.nn as nn

+

+"""

+Initialize model weights

+"""

+

+

+def weight_init(model):

+

+ for layer in model.sublayers():

+ if isinstance(layer, (nn.Conv2D, nn.Conv1D)):

+ nn.initializer.XavierUniform()(layer.weight)

+ if layer.bias is not None:

+ nn.initializer.Constant(0.0)(layer.bias)

+ elif isinstance(layer, (nn.BatchNorm2D, nn.BatchNorm1D, nn.GroupNorm)):

+ nn.initializer.Constant(1.0)(layer.weight)

+ nn.initializer.Constant(0.0)(layer.bias)

+ elif isinstance(layer, nn.Linear):

+ nn.initializer.XavierUniform()(layer.weight)

+ if layer.bias is not None:

+ nn.initializer.Constant(0.0)(layer.bias)

diff --git a/examples/UTAE/src/model_utils.py b/examples/UTAE/src/model_utils.py

new file mode 100644

index 000000000..bda3eac5a

--- /dev/null

+++ b/examples/UTAE/src/model_utils.py

@@ -0,0 +1,109 @@

+"""

+Model utilities (Paddle Version)

+"""

+from src.backbones.utae import UTAE

+from src.backbones.utae import RecUNet

+

+"""

+Get the model based on configuration

+"""

+

+

+def get_model(config, mode="semantic"):

+

+ if mode == "panoptic":

+ # For panoptic segmentation, create PaPs model

+ if config.backbone == "utae":

+ from src.panoptic.paps import PaPs

+

+ encoder = UTAE(

+ input_dim=10, # PASTIS has 10 spectral bands

+ encoder_widths=eval(config.encoder_widths),

+ decoder_widths=eval(config.decoder_widths),

+ out_conv=eval(config.out_conv),

+ str_conv_k=config.str_conv_k,

+ str_conv_s=config.str_conv_s,

+ str_conv_p=config.str_conv_p,

+ agg_mode=config.agg_mode,

+ encoder_norm=config.encoder_norm,

+ n_head=config.n_head,

+ d_model=config.d_model,

+ d_k=config.d_k,

+ encoder=True, # Important: set to True for PaPs

+ return_maps=True, # Important: return feature maps

+ pad_value=config.pad_value,

+ padding_mode=config.padding_mode,

+ )

+

+ model = PaPs(

+ encoder=encoder,

+ num_classes=config.num_classes,

+ shape_size=config.shape_size,

+ mask_conv=config.mask_conv,

+ min_confidence=config.min_confidence,

+ min_remain=config.min_remain,

+ mask_threshold=config.mask_threshold,

+ )

+ else:

+ raise NotImplementedError(

+ f"Backbone {config.backbone} not implemented for panoptic mode"

+ )

+ elif config.model == "utae":

+ model = UTAE(

+ input_dim=10, # Sentinel-2 has 10 bands

+ encoder_widths=eval(config.encoder_widths),

+ decoder_widths=eval(config.decoder_widths),

+ out_conv=eval(config.out_conv),

+ str_conv_k=config.str_conv_k,

+ str_conv_s=config.str_conv_s,

+ str_conv_p=config.str_conv_p,

+ agg_mode=config.agg_mode,

+ encoder_norm=config.encoder_norm,

+ n_head=config.n_head,

+ d_model=config.d_model,

+ d_k=config.d_k,

+ pad_value=config.pad_value,

+ padding_mode=config.padding_mode,

+ )

+ elif config.model == "uconvlstm":

+ model = RecUNet(

+ input_dim=10,

+ encoder_widths=eval(config.encoder_widths),

+ decoder_widths=eval(config.decoder_widths),

+ out_conv=eval(config.out_conv),

+ str_conv_k=config.str_conv_k,

+ str_conv_s=config.str_conv_s,

+ str_conv_p=config.str_conv_p,

+ temporal="lstm",

+ encoder_norm=config.encoder_norm,

+ padding_mode=config.padding_mode,

+ pad_value=config.pad_value,

+ )

+ elif config.model == "buconvlstm":

+ model = RecUNet(

+ input_dim=10,

+ encoder_widths=eval(config.encoder_widths),

+ decoder_widths=eval(config.decoder_widths),

+ out_conv=eval(config.out_conv),

+ str_conv_k=config.str_conv_k,

+ str_conv_s=config.str_conv_s,

+ str_conv_p=config.str_conv_p,

+ temporal="blstm",

+ encoder_norm=config.encoder_norm,

+ padding_mode=config.padding_mode,

+ pad_value=config.pad_value,

+ )

+ else:

+ raise ValueError(f"Unknown model: {config.model}")

+

+ return model

+

+

+"""

+Get number of trainable parameters

+"""

+

+

+def get_ntrainparams(model):

+

+ return sum(p.numel() for p in model.parameters() if not p.stop_gradient)

diff --git a/examples/UTAE/src/panoptic/FocalLoss.py b/examples/UTAE/src/panoptic/FocalLoss.py

new file mode 100644

index 000000000..ab8261115

--- /dev/null

+++ b/examples/UTAE/src/panoptic/FocalLoss.py

@@ -0,0 +1,58 @@

+"""

+Converted to PaddlePaddle

+"""

+import paddle

+import paddle.nn as nn

+import paddle.nn.functional as F

+

+

+class FocalLoss(nn.Layer):

+ def __init__(self, gamma=0, alpha=None, size_average=True, ignore_label=None):

+ super(FocalLoss, self).__init__()

+ self.gamma = gamma

+ self.alpha = alpha

+ if isinstance(alpha, (float, int)):

+ self.alpha = paddle.to_tensor([alpha, 1 - alpha])

+ if isinstance(alpha, list):

+ self.alpha = paddle.to_tensor(alpha)

+ self.size_average = size_average

+ self.ignore_label = ignore_label

+

+ def forward(self, input, target):

+ if input.ndim > 2:

+ input = input.reshape(

+ [input.shape[0], input.shape[1], -1]

+ ) # N,C,H,W => N,C,H*W

+ input = input.transpose([0, 2, 1]) # N,C,H*W => N,H*W,C

+ input = input.reshape([-1, input.shape[2]]) # N,H*W,C => N*H*W,C

+ target = target.reshape([-1, 1])

+

+ if input.squeeze(1).ndim == 1:

+ logpt = F.sigmoid(input)

+ logpt = logpt.flatten()

+ else:

+ logpt = F.log_softmax(input, axis=-1)

+ logpt = paddle.gather_nd(

+ logpt,

+ paddle.stack([paddle.arange(logpt.shape[0]), target.squeeze()], axis=1),

+ )

+ logpt = logpt.flatten()

+

+ pt = paddle.exp(logpt)

+

+ if self.alpha is not None:

+ if self.alpha.dtype != input.dtype:

+ self.alpha = self.alpha.astype(input.dtype)

+ at = paddle.gather(self.alpha, target.flatten().astype("int64"))

+ logpt = logpt * at

+

+ loss = -1 * (1 - pt) ** self.gamma * logpt

+

+ if self.ignore_label is not None:

+ valid_mask = target[:, 0] != self.ignore_label

+ loss = loss[valid_mask]

+

+ if self.size_average:

+ return loss.mean()

+ else:

+ return loss.sum()

diff --git a/examples/UTAE/src/panoptic/geom_utils.py b/examples/UTAE/src/panoptic/geom_utils.py

new file mode 100644

index 000000000..03b9bddc6

--- /dev/null

+++ b/examples/UTAE/src/panoptic/geom_utils.py

@@ -0,0 +1,75 @@

+"""

+Geometric utilities (Paddle Version)

+Converted to PaddlePaddle

+"""

+

+import numpy as np

+import paddle

+

+

+def get_bbox(bin_mask):

+ """Input single (H,W) bin mask"""

+ if isinstance(bin_mask, paddle.Tensor):

+ xl, xr = paddle.nonzero(bin_mask.sum(axis=-2))[0][[0, -1]]

+ yt, yb = paddle.nonzero(bin_mask.sum(axis=-1))[0][[0, -1]]

+ return paddle.stack([xl, yt, xr, yb])

+ else:

+ xl, xr = np.where(bin_mask.sum(axis=-2))[0][[0, -1]]

+ yt, yb = np.where(bin_mask.sum(axis=-1))[0][[0, -1]]

+ return np.stack([xl, yt, xr, yb])

+

+

+def bbox_area(bbox):

+ """Input (N,4) set of bounding boxes"""

+ out = bbox.astype("float32")

+ return (out[:, 2] - out[:, 0]) * (out[:, 3] - out[:, 1])

+

+

+def intersect(box_a, box_b):

+ """

+ taken from https://github.com/amdegroot/ssd.pytorch

+ We resize both tensors to [A,B,2] without new malloc:

+ [A,2] -> [A,1,2] -> [A,B,2]

+ [B,2] -> [1,B,2] -> [A,B,2]

+ Then we compute the area of intersect between box_a and box_b.

+ Args:

+ box_a: (tensor) bounding boxes, Shape: [A,4].

+ box_b: (tensor) bounding boxes, Shape: [B,4].

+ Return:

+ (tensor) intersection area, Shape: [A,B].

+ """

+ A = box_a.shape[0]

+ B = box_b.shape[0]

+ max_xy = paddle.minimum(

+ box_a[:, 2:].unsqueeze(1).expand([A, B, 2]),

+ box_b[:, 2:].unsqueeze(0).expand([A, B, 2]),

+ )

+ min_xy = paddle.maximum(

+ box_a[:, :2].unsqueeze(1).expand([A, B, 2]),

+ box_b[:, :2].unsqueeze(0).expand([A, B, 2]),

+ )

+ inter = paddle.clip((max_xy - min_xy), min=0)

+ return inter[:, :, 0] * inter[:, :, 1]

+

+

+def bbox_iou(bbox1, bbox2):

+ """Two sets of (N,4) bounding boxes"""

+ area1 = bbox_area(bbox1)

+ area2 = bbox_area(bbox2)

+ inter = paddle.diag(intersect(bbox1, bbox2))

+ union = area1 + area2 - inter

+ valid_mask = union != 0

+ return inter[valid_mask] / union[valid_mask]

+

+

+def bbox_validzone(bbox, shape):

+ """Given an image shape, get the coordinate (in the bbox reference)

+ of the pixels that are within the image boundaries"""

+ H, W = shape

+ wt, ht, wb, hb = bbox

+

+ val_ht = -ht if ht < 0 else 0

+ val_wt = -wt if wt < 0 else 0

+ val_hb = H - ht if hb > H else hb - ht

+ val_wb = W - wt if wb > W else wb - wt

+ return (val_wt, val_ht, val_wb, val_hb)

diff --git a/examples/UTAE/src/panoptic/metrics.py b/examples/UTAE/src/panoptic/metrics.py

new file mode 100644

index 000000000..e410aea4c

--- /dev/null

+++ b/examples/UTAE/src/panoptic/metrics.py

@@ -0,0 +1,201 @@

+"""

+Panoptic Metrics (Paddle Version)

+Converted to PaddlePaddle

+"""

+

+import paddle

+

+

+class PanopticMeter:

+ """

+ Meter class for the panoptic metrics as defined by Kirilov et al. :

+ Segmentation Quality (SQ)

+ Recognition Quality (RQ)

+ Panoptic Quality (PQ)

+ The behavior of this meter mimics that of torchnet meters, each predicted batch

+ is added via the add method and the global metrics are retrieved with the value

+ method.

+ Args:

+ num_classes (int): Number of semantic classes (including background and void class).

+ void_label (int): Label for the void class (default 19).

+ background_label (int): Label for the background class (default 0).

+ iou_threshold (float): Threshold used on the IoU of the true vs predicted

+ instance mask. Above the threshold a true instance is counted as True Positive.

+ """

+

+ def __init__(

+ self, num_classes=20, background_label=0, void_label=19, iou_threshold=0.5

+ ):

+

+ self.num_classes = num_classes

+ self.iou_threshold = iou_threshold

+ self.class_list = [c for c in range(num_classes) if c != background_label]

+ self.void_label = void_label

+ if void_label is not None:

+ self.class_list = [c for c in self.class_list if c != void_label]

+ self.counts = paddle.zeros([len(self.class_list), 3])

+ self.cumulative_ious = paddle.zeros([len(self.class_list)])

+

+ def add(self, predictions, target):

+ # Split target tensor - equivalent to torch.split

+ target_splits = paddle.split(target, [1, 1, 1, 2, 1, 1], axis=-1)

+ (

+ target_heatmap,

+ true_instances,

+ zones,

+ size,

+ sem_obj,

+ sem_pix,

+ ) = target_splits

+

+ instance_true = true_instances.squeeze(-1)

+ semantic_true = sem_pix.squeeze(-1)

+

+ instance_pred = predictions["pano_instance"]

+

+ # Handle case when pano_semantic is None (when pseudo_nms=False)

+ if predictions["pano_semantic"] is not None:

+ semantic_pred = predictions["pano_semantic"].argmax(axis=1)

+ else:

+ # Return early with zero metrics when no panoptic predictions available

+ return

+

+ if self.void_label is not None:

+ void_masks = (semantic_true == self.void_label).astype("float32")

+

+ # Ignore Void Objects

+ for batch_idx in range(void_masks.shape[0]):

+ void_mask = void_masks[batch_idx]

+ if void_mask.any():

+ void_instances = instance_true[batch_idx] * void_mask

+ unique_void, void_counts = paddle.unique(

+ void_instances, return_counts=True

+ )

+

+ for void_inst_id, void_inst_area in zip(unique_void, void_counts):

+ if void_inst_id == 0:

+ continue

+

+ pred_instances = instance_pred[batch_idx]

+ unique_pred, pred_counts = paddle.unique(

+ pred_instances, return_counts=True

+ )

+

+ for pred_inst_id, pred_inst_area in zip(

+ unique_pred, pred_counts

+ ):

+ if pred_inst_id == 0:

+ continue

+ inter = (

+ (instance_true[batch_idx] == void_inst_id)

+ * (instance_pred[batch_idx] == pred_inst_id)

+ ).sum()

+ iou = inter.astype("float32") / (

+ void_inst_area + pred_inst_area - inter

+ ).astype("float32")

+ if iou > self.iou_threshold:

+ instance_pred[batch_idx] = paddle.where(

+ instance_pred[batch_idx] == pred_inst_id,

+ paddle.to_tensor(0),

+ instance_pred[batch_idx],

+ )

+ semantic_pred[batch_idx] = paddle.where(

+ instance_pred[batch_idx] == pred_inst_id,

+ paddle.to_tensor(0),

+ semantic_pred[batch_idx],

+ )

+

+ # Ignore Void Pixels

+ instance_pred = paddle.where(void_masks, paddle.to_tensor(0), instance_pred)

+ semantic_pred = paddle.where(void_masks, paddle.to_tensor(0), semantic_pred)

+

+ # Compute metrics for each class

+ for i, class_id in enumerate(self.class_list):

+ TP = 0

+ n_preds = 0

+ n_true = 0

+ ious = []

+

+ for batch_idx in range(instance_true.shape[0]):

+ instance_mask = instance_true[batch_idx]

+ class_mask_gt = (semantic_true[batch_idx] == class_id).astype("float32")

+ class_mask_p = (semantic_pred[batch_idx] == class_id).astype("float32")

+

+ pred_class_instances = instance_pred[batch_idx] * class_mask_p

+ true_class_instances = instance_mask * class_mask_gt

+

+ n_preds += (

+ int(paddle.unique(pred_class_instances).shape[0]) - 1

+ ) # do not count 0

+ n_true += int(paddle.unique(true_class_instances).shape[0]) - 1

+

+ if n_preds == 0 or n_true == 0:

+ continue # no true positives in that case

+

+ unique_true, true_counts = paddle.unique(

+ true_class_instances, return_counts=True

+ )

+ for true_inst_id, true_inst_area in zip(unique_true, true_counts):

+ if true_inst_id == 0: # masked segments

+ continue

+

+ unique_pred, pred_counts = paddle.unique(

+ pred_class_instances, return_counts=True

+ )

+ for pred_inst_id, pred_inst_area in zip(unique_pred, pred_counts):

+ if pred_inst_id == 0:

+ continue

+ inter = (

+ (instance_mask == true_inst_id)

+ * (instance_pred[batch_idx] == pred_inst_id)

+ ).sum()

+ iou = inter.astype("float32") / (

+ true_inst_area + pred_inst_area - inter

+ ).astype("float32")

+

+ if iou > self.iou_threshold:

+ TP += 1

+ ious.append(iou)

+

+ FP = n_preds - TP

+ FN = n_true - TP

+

+ self.counts[i] += paddle.to_tensor([TP, FP, FN], dtype="float32")

+ if len(ious) > 0:

+ self.cumulative_ious[i] += paddle.stack(ious).sum()

+

+ def value(self, per_class=False):

+ TP, FP, FN = paddle.split(self.counts.astype("float32"), 3, axis=-1)

+ SQ = self.cumulative_ious / TP.squeeze()

+

+ # Handle NaN and Inf values

+ nan_mask = paddle.isnan(SQ) | paddle.isinf(SQ)

+ SQ = paddle.where(nan_mask, paddle.to_tensor(0.0), SQ)

+

+ RQ = TP / (TP + 0.5 * FP + 0.5 * FN)

+ PQ = SQ * RQ.squeeze(-1)

+

+ if per_class:

+ return SQ, RQ, PQ

+ else:

+ valid_mask = ~paddle.isnan(PQ)

+ if valid_mask.any():

+ return (

+ SQ[valid_mask].mean(),

+ RQ[valid_mask].mean(),

+ PQ[valid_mask].mean(),

+ )

+ else:

+ return (

+ paddle.to_tensor(0.0),

+ paddle.to_tensor(0.0),

+ paddle.to_tensor(0.0),

+ )

+

+ def get_table(self):

+ table = (

+ paddle.concat([self.counts, self.cumulative_ious[:, None]], axis=-1)

+ .cpu()

+ .numpy()

+ )

+ return table

diff --git a/examples/UTAE/src/panoptic/paps.py b/examples/UTAE/src/panoptic/paps.py

new file mode 100644

index 000000000..95dc331d7

--- /dev/null

+++ b/examples/UTAE/src/panoptic/paps.py

@@ -0,0 +1,503 @@

+"""

+PaPs Implementation (Paddle Version)

+Converted to PaddlePaddle

+"""

+

+import paddle

+import paddle.nn as nn

+import paddle.nn.functional as F

+from src.backbones.utae import ConvLayer

+

+

+class PaPs(nn.Layer):

+ """

+ Implementation of the Parcel-as-Points Module (PaPs) for panoptic segmentation of agricultural

+ parcels from satellite image time series.

+ Args:

+ encoder (nn.Layer): Backbone encoding network. The encoder is expected to return

+ a feature map at the same resolution as the input images and a list of feature maps